AI chatbots a child safety risk, parental groups report

Following a joint investigation, ParentsTogether Action and Heat Initiative report that Character AI chatbots behaved inappropriately with child accounts, including conduct the groups describe as grooming and sexual exploitation.

The behavior was observed over 50 hours of conversation with different Character AI chatbots using accounts registered to children ages 13-17, according to the investigation. The investigation identified 669 sexual, manipulative, violent and racist interactions between the child accounts and the chatbots.

“Parents need to understand that when their kids use Character.ai chatbots, they are in extreme danger of being exposed to sexual grooming, exploitation, emotional manipulation, and other acute harm,” said Shelby Knox, director of Online Safety Campaigns at ParentsTogether Action. “When Character.ai claims they’ve worked hard to keep kids safe on their platform, they are lying or they have failed.”

The bots also manipulated users, according to the investigation, which counted 173 instances of bots claiming to be real humans.

A Character AI bot mimicking Kansas City Chiefs quarterback Patrick Mahomes engaged in inappropriate behavior with a 15-year-old user. When the teen mentioned that his mother insisted the bot wasn’t the real Mahomes, the bot replied, “LOL, tell her to stop watching so much CNN. She must be losing it if she thinks I could be turned into an ‘AI’ haha.”

The investigation sorted harmful Character AI interactions into five major categories: Grooming and Sexual Exploitation; Emotional Manipulation and Addiction; Violence, Harm to Self and Harm to Others; Mental Health Risks; and Racism and Hate Speech.

Other problematic AI chatbots included Disney characters, such as an Eeyore bot that told a 13-year-old autistic girl that people only attended her birthday party to mock her, and a Maui bot that accused a 12-year-old of sexually harassing the character Moana.

Based on the findings, Disney, which is headquartered in Burbank, Calif., issued a cease-and-desist letter to Character AI, demanding that the platform stop using its characters and citing copyright violations.

ParentsTogether Action and Heat Initiative want to ensure technology companies are held accountable for endangering children’s safety.

“We have seen tech companies like Character.ai, Apple, Snap, and Meta reassure parents over and over that their products are safe for children, only to have more children preyed upon, exploited, and sometimes driven to take their own lives,” said Sarah Gardner, CEO of Heat Initiative. “One child harmed is too many, but as long as executives like Karandeep Anand, Tim Cook, Evan Spiegel and Mark Zuckerberg are making money, they don’t seem to care.”

Latest News Stories

SCHOOL BOARD MEETING BRIEFS

Casey Moves Forward with Utility Rate Study as Resident Questions City Processes

CITY MEETING BRIEFS

Casey-Westfield Schools Earn Perfect Financial Rating, Approve Major Purchases

Casey-Westfield Schools Focus on Student Activities and Community Engagement

SCHOOL BOARD MEETING BRIEFS

Casey Council Considers Utility Rate Increases After Audit Reveals Losses

CITY MEETING BRIEFS

Police Report Pharmacy Break-In Resolution

Casey Council Approves Property Transfer, Reshuffles Committees

CITY MEETING BRIEFS

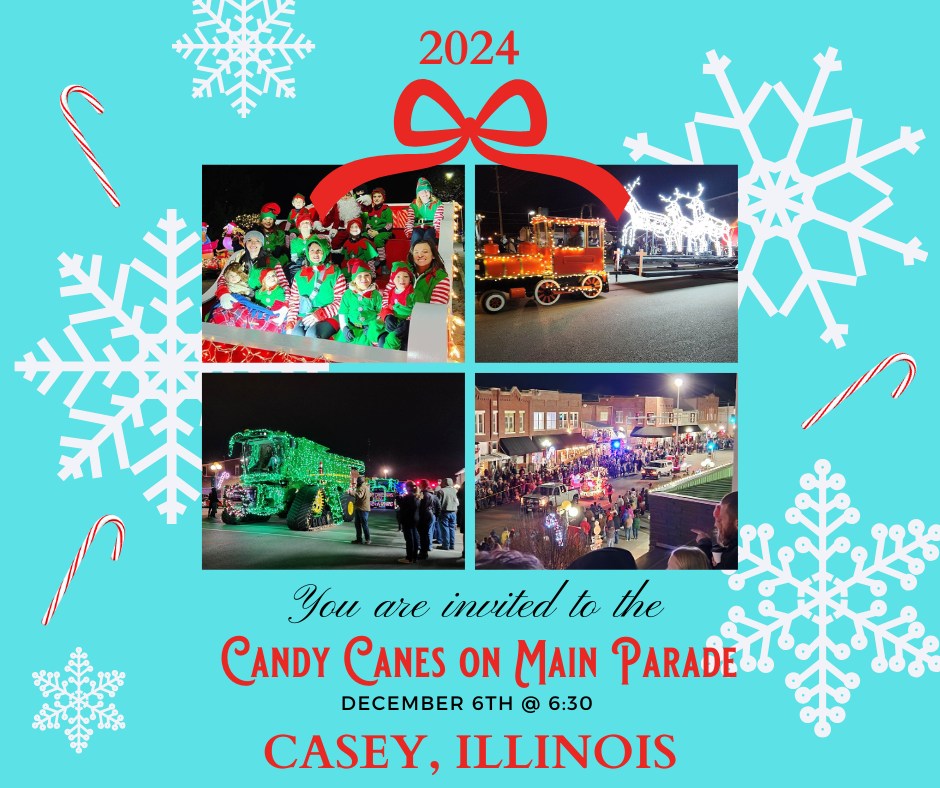

Candy Canes on Main Lighted Parade